In our previous blog post, we took an in-depth look at AI hallucinations, including what they are, why they matter, and how they can be measured. In this article, we’re going to look at some of the ways AI hallucinations can be controlled so that large language models (LLMs) deployed in the life sciences space deliver accurate, safe, and useful outputs.

Let’s dive in!

Three Methods for Controlling AI Hallucinations

There are many methods used to control AI hallucinations across various use cases, but for the purposes of this article, we’ll focus on the three methods most relevant to the life sciences.

Method 1: Retrieval Augmented Generation (RAG)

As we discussed in our blog post on measuring AI hallucinations, LLMs hallucinate for a number of different reasons. Two of the primary ones are that they are static, or “frozen in time”, and so lack up-to-date information, and that they are most often trained for generalized tasks without access to private company data, which means they can lack domain-specific knowledge.

One of the methods that combats these issues is Retrieval Augmented Generation (RAG).

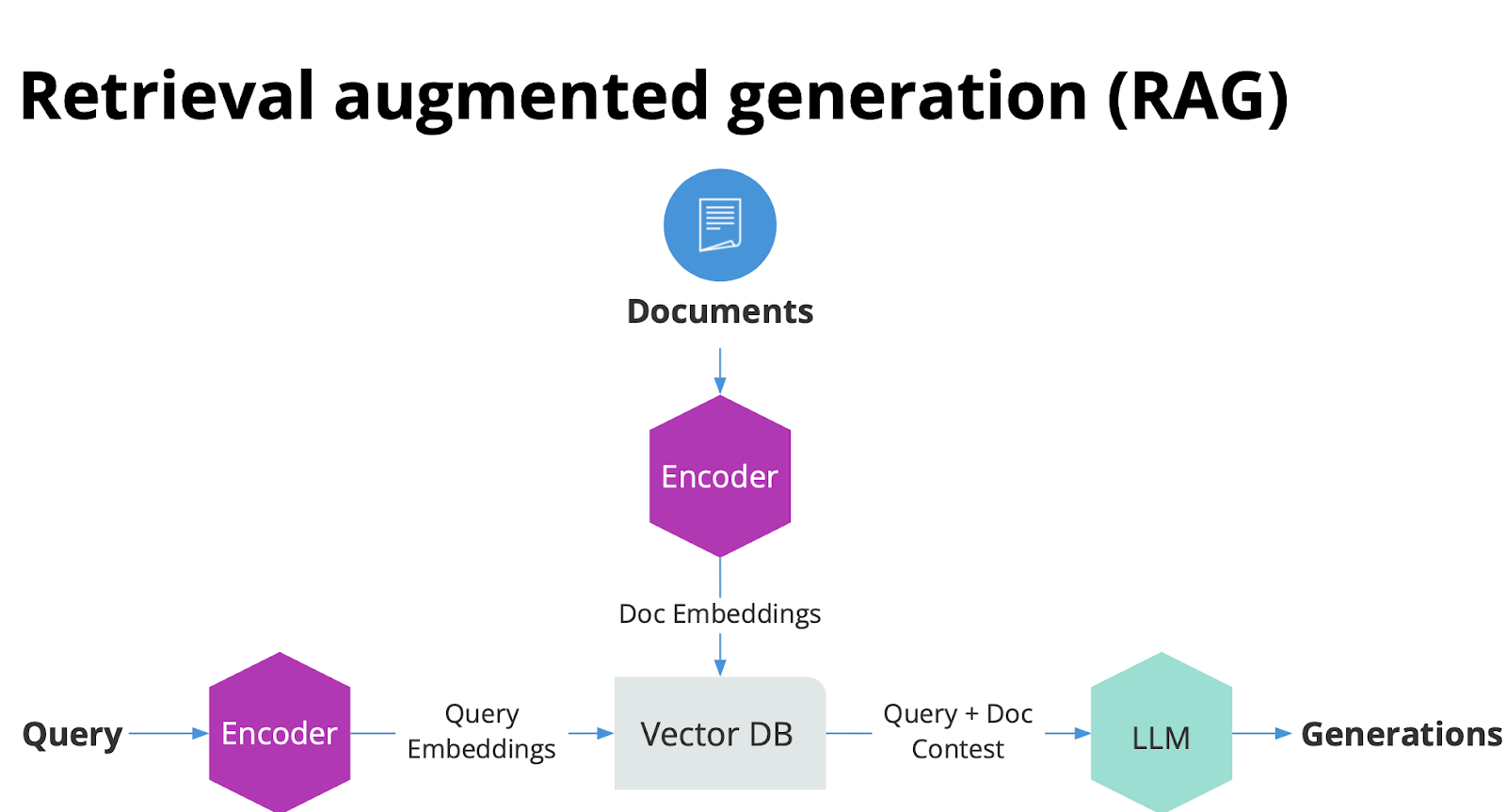

During this process, a bank of relevant documents is passed through a deep learning model (an embedding model) to produce a vector embedding for each document. These embeddings are then stored in a database.

When the user enters a query, the deep learning model generates a vector embedding for that specific query. The document embeddings that match most closely with the query embedding identify the relevant documents, which are then supplied to the LLM as context alongside the query. The LLM reads those documents and generates a summarized output that answers the question based on the information they contain.

RAG helps reduce hallucinations because it allows you to augment LLMs with updated and previously unavailable information, including private data. RAG offloads factual information from the LLM to the retrieval system, so the LLM only has to compose a response from the context provided, which makes it easier for the LLM to reason successfully.
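The retrieval step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `embed` function is a toy bag-of-words stand-in for a learned embedding model, an in-memory list stands in for the embedding database, and the three documents and all function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical document bank, invented purely for illustration.
DOCUMENTS = [
    "Protocol amendment 3 raises the maximum daily dose to 40 mg.",
    "Site 12 enrolled 18 patients in the first quarter.",
    "Adverse events must be reported within 24 hours of discovery.",
]

# "Database" of (document, embedding) pairs, built once up front.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k documents whose embeddings are closest to the query's."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(query: str, k: int = 2) -> str:
    """Assemble the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

print(build_prompt("What is the maximum daily dose?", k=1))
```

The prompt explicitly tells the model to rely on the retrieved context, which is the part of RAG that shifts factual recall from the LLM to the retrieval system.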

Method 2: Chain-of-Verification (CoVe)

Another method used to control AI hallucinations is Chain-of-Verification (CoVe). This approach requires the LLM to plan and execute a set of verification questions that are used to check its draft response to a query before it produces a final output.

The basic CoVe process involves four steps:

- Generate a baseline response: This involves inputting the query, then generating the response using the LLM. The resulting response may not be factually accurate.

- Plan verifications: Next, based on the query and the baseline response, the LLM generates a list of verification questions. These will be used to test the accuracy of the baseline response. Verification questions are usually simple questions with straightforward answers.

- Execute verifications: This step involves prompting the LLM to answer each of the verification questions in turn, then checking those answers against the baseline response to identify inconsistencies and mistakes.

- Final response: Inconsistencies and mistakes are removed, and a final response is generated incorporating the verification results.

Let’s look at an example following the steps above:

- The query we input is, “Name some politicians who were born in New York, New York.” The LLM generates the following baseline answer: Hillary Clinton, Donald Trump, Michael Bloomberg, etc.

- The verification questions appropriate to this query would be:

– Where was Hillary Clinton born?

– Where was Donald Trump born?

– Where was Michael Bloomberg born?

- The LLM executes the verifications, answering as follows:

– Hillary Clinton was born in Chicago, Illinois

– Donald Trump was born in New York City, New York

– Michael Bloomberg was born in Boston, Massachusetts

From these answers, we can see that the baseline response contains hallucinations (Hillary Clinton and Michael Bloomberg were not born in New York), and we can ask the LLM to remove them.

- Final verified response: Incorporating the verification answers, the LLM is able to produce an accurate list of politicians born in New York, New York.
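The four steps of this example can be sketched in Python as follows. This is an illustrative sketch only: `stub_llm` is a hypothetical stand-in that returns canned answers instead of calling a real model, the function and variable names are assumptions, and the verification questions are built from a fixed template, whereas in CoVe the LLM itself plans them.

```python
from typing import Callable

# Hypothetical interface: a function that takes a prompt and returns text.
LLM = Callable[[str], str]

QUERY = "Name some politicians who were born in New York, New York."

# Canned answers standing in for real model calls (taken from the example above).
CANNED = {
    QUERY: "Hillary Clinton, Donald Trump, Michael Bloomberg",
    "Where was Hillary Clinton born?": "Chicago, Illinois",
    "Where was Donald Trump born?": "New York City, New York",
    "Where was Michael Bloomberg born?": "Boston, Massachusetts",
}

def stub_llm(prompt: str) -> str:
    """Stand-in for an LLM call; a real system would query a model here."""
    return CANNED[prompt]

def cove_birthplace(query: str, place: str, llm: LLM) -> list[str]:
    # Step 1: generate a baseline response (may contain hallucinations).
    baseline = [name.strip() for name in llm(query).split(",")]

    # Step 2: plan verifications, one simple question per claimed name.
    # (Templated here for brevity; CoVe has the LLM write these questions.)
    questions = {name: f"Where was {name} born?" for name in baseline}

    # Step 3: execute each verification independently of the baseline.
    answers = {name: llm(question) for name, question in questions.items()}

    # Step 4: final response, keeping only names the verifications support.
    return [name for name in baseline if place.lower() in answers[name].lower()]

print(cove_birthplace(QUERY, "New York", stub_llm))
```

Answering each verification question in a separate call keeps the check independent of the baseline response, so an error in the baseline is less likely to be repeated in the verification.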

Method 3: Human in the loop

Keeping the human in the loop is another way to mitigate the impact of AI hallucinations. In this approach, whatever the LLM generates is treated as a recommendation or suggestion which is presented to the user for verification.

For example, Saama’s Smart Data Quality solution, which uses GenAI to auto-generate data queries, presents pre-generated queries for human review. The user then approves, edits, or rejects the queries, and the data gathered from this process can be used to train and improve the LLM later on.
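An approve, edit, or reject workflow of this kind could be modeled roughly as below. This is a generic sketch and not a description of how Saama’s product is implemented: the `Suggestion` and `Decision` types, the function names, and the sample query text are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Decision(Enum):
    APPROVED = "approved"
    EDITED = "edited"
    REJECTED = "rejected"

@dataclass
class Suggestion:
    text: str                           # what the LLM generated
    decision: Optional[Decision] = None  # None until a human has reviewed it
    final_text: Optional[str] = None     # what is actually released, if anything

def review(s: Suggestion, decision: Decision,
           edited_text: Optional[str] = None) -> Suggestion:
    """Record the reviewer's decision on one LLM suggestion."""
    if decision is Decision.EDITED:
        if not edited_text:
            raise ValueError("an edited decision needs the reviewer's text")
        s.final_text = edited_text
    elif decision is Decision.APPROVED:
        s.final_text = s.text
    else:
        s.final_text = None
    s.decision = decision
    return s

def released(suggestions: list[Suggestion]) -> list[str]:
    """Only human-approved or human-edited text leaves the system."""
    keep = (Decision.APPROVED, Decision.EDITED)
    return [s.final_text for s in suggestions if s.decision in keep]

def feedback_records(suggestions: list[Suggestion]) -> list[dict]:
    """Reviewed suggestions, in a form that could feed later model improvement."""
    return [
        {"suggested": s.text, "decision": s.decision.value, "final": s.final_text}
        for s in suggestions
        if s.decision is not None
    ]

# Hypothetical usage: one approved, one rejected, one not yet reviewed.
queue = [
    review(Suggestion("Visit date precedes consent date; please confirm."), Decision.APPROVED),
    review(Suggestion("Please confirm the patient's favourite colour."), Decision.REJECTED),
    Suggestion("Weight recorded as 700 kg; please confirm."),
]
print(released(queue))
```

The key design choice is that an unreviewed suggestion is never released by default: nothing reaches downstream users without an explicit human decision.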

Unlocking the potential of responsible GenAI

The above methods can be used individually, but more often they’re deployed in combination to ensure that LLM outputs are verified, accurate, and do no harm. That combination is what allows the potential of GenAI in the life sciences space to be fully realized.

Within the Saama platform, industry-leading GenAI is carefully monitored for accuracy and veracity, and the human is always kept in the loop.

If you’d like to find out more about how we’re deploying GenAI to transform clinical development processes, book a demo with a member of our team today.